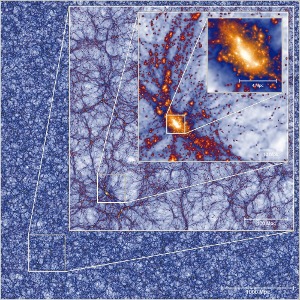

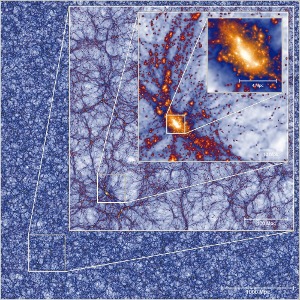

Fig. 1: The mass density field in the Millennium-XXL, focusing on the most
massive halo present in the simulation at z=0. Each inset zooms by a
factor of 8 from the previous one; the side-length varies from 4.1 Gpc
down to 8.1 Mpc. All these images are projections of a thin slice
through the simulation of thickness 8 Mpc.

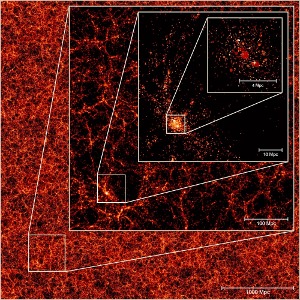

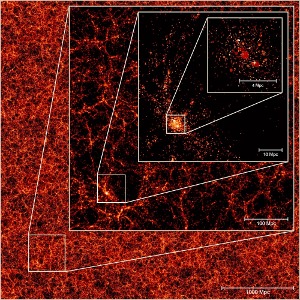

Fig. 2: The predicted galaxy distribution in the Millennium-XXL simulation.
Each galaxy is represented by a sphere whose intensity and size are related to
the expected total mass in stars and the size of its cold gas disk,
respectively.

Over the last two decades, cosmological numerical simulations have played a
decisive role in establishing the ΛCDM paradigm as a viable description
of the observable Universe. For instance, they allow astronomers to explore the
impact of the different aspects of this standard model on the spatial
distribution of galaxies, which can then be directly compared with observations
to validate or rule out a particular model. Similarly, such simulations have
proven to be an indispensable tool in understanding the low- and high-redshift
Universe, since they provide the only way to accurately predict the outcome of
non-linear cosmic structure formation.

Scientists at the Max Planck Institute for Astrophysics, together with
collaborators in the Virgo Consortium, have recently completed the largest
cosmological N-body simulation ever performed. This calculation solved for the
gravitational interactions between more than 300 billion particles over the
equivalent of more than 13 billion years, thus simultaneously making
predictions for the mass distribution in the Universe on very large and very
small scales. Carrying out this computation proved to be a formidable challenge
even on today's most powerful supercomputers. The simulation required the
equivalent of 300 years of CPU time and used more than twelve thousand
computer cores and 30 TB of RAM on the Juropa machine at the Jülich
Supercomputing Centre in Germany, one of the 15 most powerful computers in
the world at the time of execution. The simulation generated more than 100 TB
of data products.
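As a rough sanity check on these figures, the short Python sketch below converts the quoted CPU time into an approximate wall-clock duration and estimates the memory available per particle. It assumes that all cores were busy for the whole run, that "TB" means 10^12 bytes, and it uses the quoted lower bounds (twelve thousand cores, 300 billion particles); the variable names are illustrative only.

```python
# Back-of-envelope check of the resource figures quoted in the text.
# Assumptions (not from the article): perfect utilisation of every core
# for the whole run, and decimal terabytes (1 TB = 1e12 bytes).

cpu_years = 300            # total CPU time quoted in the text
cores = 12_000             # "more than twelve thousand" cores (lower bound)
ram_bytes = 30 * 1e12      # 30 TB of RAM
n_particles = 300e9        # "more than 300 billion" particles (lower bound)

# Wall-clock time if the CPU time is spread evenly over all cores.
wall_clock_days = cpu_years * 365.25 / cores

# Average memory budget per particle.
bytes_per_particle = ram_bytes / n_particles

print(f"approximate wall-clock time: {wall_clock_days:.1f} days")
print(f"memory per particle: about {bytes_per_particle:.0f} bytes")
```

Under these assumptions the run corresponds to roughly nine days of wall-clock time and on the order of 100 bytes of memory per particle; the real values depend on how the machine was actually used.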

This new simulation, dubbed the Millennium-XXL, follows all 6720³ particles
in a cosmological box of side 4.1 Gpc, resolving large-scale structure
with an unprecedented combination of volume and detail. The enormous
statistical power of the simulation is hinted at in Fig. 1, which shows the
projected density field on very large scales and for the largest cluster found
at z=0. The simulation has been used to model galaxy formation and
evolution, providing a sample of around 700 million galaxies at low redshift,
whose distribution is displayed in Fig. 2. This allows detailed clustering
studies for rare objects such as quasars or massive galaxy clusters, and also
new ways to physically model observations. In particular, the scale-dependent
relation between galaxies and the underlying dark matter distribution, and the
impact of non-linear evolution on the so-called baryonic acoustic oscillations
(BAOs) measured in the power spectrum of galaxy clustering, can be addressed in
a fully physical way for the first time.
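To make the scale of the simulation concrete, the following Python sketch works out the total particle count and the mean spacing between particles from the two numbers quoted above (6720 particles per side, box side 4.1 Gpc). It assumes the particles start on a regular cubic grid filling the box, which is a simplification used only for this estimate.

```python
# Particle count and mean inter-particle spacing from the quoted figures.
# Assumption (not from the article): particles are treated as lying on a
# regular cubic grid, so the mean spacing is simply box side / particles per side.

n_side = 6720              # particles per dimension, as quoted
box_side_mpc = 4100.0      # 4.1 Gpc expressed in Mpc

n_particles = n_side ** 3
mean_spacing_mpc = box_side_mpc / n_side

print(f"total particles: {n_particles:,}")
print(f"mean inter-particle spacing: {mean_spacing_mpc:.2f} Mpc")
```

The count comes out at about 3.03 × 10^11, consistent with the "more than 300 billion particles" quoted for the run, and the mean spacing is about 0.6 Mpc.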

This work is expected to be crucial in understanding new observational data,
whose aim is to shed light on the nature of the dark energy via measurements of
the redshift evolution of its equation of state. In particular, the arrival of
the largest galaxy surveys ever made is imminent, offering enormous scientific
potential for new discoveries. Experiments like SDSS-III/BOSS or Pan-STARRS have
started to scan the sky in unprecedented detail, considerably improving the
accuracy of existing cosmological probes. These experiments, combined with
theoretical efforts like the newly performed simulation, will likely lead to
challenges to the standard ΛCDM paradigm for cosmic structure
formation, and perhaps even to the discovery of new physics.

Raul Angulo & Simon White.

Additional Material

http://galformod.mpa-garching.mpg.de/mxxlbrowser/
