Purpose

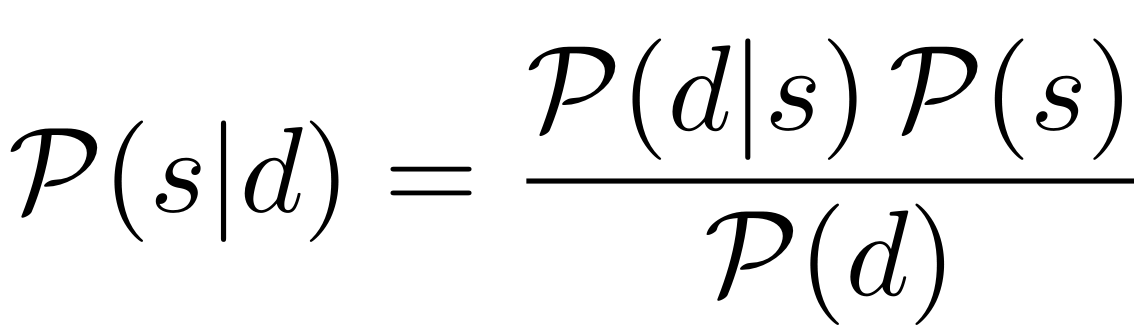

The idea is to bring together people on the Munich Garching Campus interested in applying Bayesian methods and information theory to their research. The emphasis is on astronomy/astrophysics/cosmology but other applications are welcome. Typical applications are model fitting/evaluation, image deconvolution, spectral analysis. More general statistical topics will also be included. Software packages for Bayesian analysis are another focus.

The initial announcement in 2011 led to a large response and currently we have about 170 people signed up on the mailing list. The meetings are very well attended and give us the motivation to pursue this initiative, now in its 6th year. We aim for one meeting per month, but we are flexible. Friday is the preferred day. We welcome suggestions of speakers including self-suggestions! Your views are important and can be shared via our mailing list and at the meetings.

- April 2023: We restart after the pandemic break!

- March 2023: Allen Caldwell leaves the Organizing Committee - Many thanks, Allen, for the great work, support, and spirit during all the years! We welcome Oliver Schultz (MPP) and Lukas Heinrich (TUM) in the Organizing Committee.

- April 2020: We break for the pandemic.

- January 2018: We are pleased to announce the continued support from the Excellence Cluster Universe (TUM) for this year. This backing since 2015 has allowed us to invite high-profile speakers.

- June 2017: Stella Veith left for Ireland; we wish her all the best.

- February 2017: We are pleased to announce the continued support from the Excellence Cluster Universe (TUM) for this year. This backing since 2015 has allowed us to invite high-profile speakers.

- February 2017: We welcome Stella Veith (MPA) to help with the organization.

- January 2016: We were pleased to announce the continued support from the Excellence Cluster Universe (TUM) for that year.

- February 2015: We were pleased to report that we have received financial support from the Excellence Cluster Universe (TUM), allowing us to invite more external speakers in future.

Organizers

- Torsten Enßlin (MPA)

- Fabrizia Guglielmetti (ESO)

- Lukas Heinrich (TUM)

- Oliver Schultz (MPP)

- Andy Strong (MPE)

- Udo von Toussaint (IPP)

Mailing list

This is used to announce meetings and can also be used to exchange information between members.

To subscribe/unsubscribe click here

Next Talks

- Beyond the null hypothesis: Physics-driven searches for astrophysical neutrino sources

Francesca Capel, Friday July 12 2024 at 14:00 MPP Seminar Room and online

Neutrino astronomy is in an exciting period, with the discovery of astrophysical neutrinos confirmed, but the search for their sources is still ongoing. Recent results show evidence for various possible sources, but few results have crossed the 5-sigma threshold, and the physical interpretation of these results remains challenging. In response to these difficulties, we propose a novel Bayesian approach to the detection of point-like sources of high-energy neutrinos. Motivated by evidence for emerging sources in existing data, we focus on the characterisation and interpretation of these sources, in addition to their discovery. We demonstrate the application of our framework on a simulated data set containing a population of weak sources hidden in typical backgrounds. Even for the challenging case of sources at the threshold of detection, we show that we can constrain key source properties. Additionally, we demonstrate how modelling flexible connections between similar sources can be used to recover the contribution of sources that would not be detectable individually, going beyond what is possible with existing methods.

Past Talks

- Geometric Variational Inference

- A Bayesian bridge between normative theories and biological data

- The Statistics Behind 3D Interstellar Dust Maps of the Milky Way

- Bayesian reconstruction of unobserved states in dynamical systems

- Bayesian radio interferometric calibration and imaging

- Uncertainty Quantification for 3D Magnetohydrodynamic Equilibrium Reconstruction

- Fundamental physics without spacetime: ideas, results and challenges from quantum gravity and beyond

- Disentangling the Fermi LAT sky - Multicomponent gamma-ray imaging in the spatio-spectral domain

- Cosmoglobe - mapping the sky from the Milky Way to the Big Bang

- Commander – lessons learned from two decades of global Bayesian CMB analysis

- Hierarchical Bayesian Models: What are they? Examples from Astrophysics

- A decade of information field theory 28 April 15:30, ESO Garching and online: Torsten Enßlin (MPA)

- A Bayesian approach to derive the kinematics of gravitationally lensed galaxies

- Visibility-space Bayesian forward modeling of radio interferometric gravitational lens observations

- Gauge Equivariant Convolutional Networks

- Data science problems and approaches at the IceCube Neutrino Observatory

- Metric Gaussian Variational Inference

- Bayesian population studies of short gamma-ray bursts.

- On Model Selection in Cosmology

- 2D Deconvolution with Adaptive Kernel.

- Probabilistic Linear Solvers - Treating Approximation as Inference.

- Jaynes's principle and statistical mechanics

- A hierarchical model for the energies and arrival directions of ultra-high energy cosmic rays (UHECR)

- UBIK - a universal Bayesian imaging toolkit

- Bayesian Probabilistic Numerical Methods

- Probabilistic Numerics — Uncertainty in Computation

- Collaborative Nested Sampling for analysing big datasets

- Metrics for Deep Generative Models

- 3D Reconstruction and Understanding of the Real World

- Adaptive Harmonic Mean Integration and Application to Evidence Calculation

- On the Confluence of Deep Learning and Proximal Methods

- Exponential Families on Resource-Constrained Systems

- The rationality of irrationality in the Monty Hall problem

- ROSAT and XMMSLEW2 counterparts using Nway - An accurate algorithm to pair sources simultaneously between N catalogs

- Bayesian calibration of predictive computational models of arterial growth

- Variational Bayesian inference for stochastic processes

- What does Bayes have to say about tensions in cosmology and neutrino mass hierarchy?

- Uncertainty analysis using profile likelihoods and profile posteriors

- Uncertainties of Monte Carlo radiative-transfer simulations

- A Global Bayesian Analysis of Neutrino Mass Data

- A new method for inferring the 3D matter distribution from cosmic shear data

- Phase-space reconstruction of the cosmic large-scale structure

- Field dynamics via approximative Bayesian reasoning

- Surrogate minimization in high dimensions

- Accurate Inference with Incomplete Information

- Simultaneous Bayesian Location and Spectral Analysis of Gamma-Ray Bursts

- Bayesian methods in the search for gravitational waves

- An introduction to QBism

- Statistical Inference in Radio Astronomy: Bayesian Radio Imaging with the RESOLVE package

- Galactic Tomography

- Modern Probability Theory

- Rethinking the Foundations

- Bayesian Multiplicity Control

- Learning Causal Conditionals

- Confidence and Credible Intervals

- Bayes vs frequentist: why should I care?

- Dynamic system classifier

- Bayesian Reinforcement Learning

- PolyChord: next-generation nested sampling

- The Gaussian onion: priors for a spherical world

- Approximate Bayesian Computing (ABC) for model choice

- Bayesian tomography

- Stellar and Galactic Archaeology with Bayesian Methods

- Supersymmetry in a classical world: new insights on stochastic dynamics from topological field theory

- Bayesian regularization for compartment models in imaging

- Approximate Bayesian Computation in Astronomy

- p-Values for Model Evaluation

- Uncertainty quantification for computer models

- A Bayesian method for high precision pulsar timing - Obstacles, narrow pathways and chances

- Information field dynamics

- On the on/off problem

- Bayesian analyses in B-meson physics

- Calibrated Bayes for X-ray spectra

- Unraveling general relationships in multidimensional datasets

- Probabilistic image reconstruction with radio interferometers

- Turbulence modelling and control using maximum entropy principles

- DIP: Diagnostics for insufficiencies of posterior calculations - a CMB application

- Bayesian modelling of regularized and gravitationally lensed sources

- Hierarchical modeling for astronomers

- Photometric Redshifts Using Random Forests

- Bayesian search for other Earths: low-mass planets around nearby M dwarfs

- The NIFTY way of Bayesian signal inference

- Probability, propensity and probability of propensity values

- Data analysis for neutron spectrometry with liquid scintillators: applications to fusion diagnostics

- Surrogates

- Bayesian Cross-Identification in Astronomy

- Recent Developments and Applications of Bayesian Data Analysis in Fusion Science

- Large Scale Bayesian Inference in Cosmology

- Bayesian mixture models for background-source separation

- Photometric redshifts

- The uncertain uncertainties in the Galactic Faraday sky

- The Bayesian Analysis Toolkit - a C++ tool for Bayesian inference

- Beyond least squares

- Signal Discovery in Sparse Spectra - a Bayesian Analysis

- Bayesian inference in physics

- FIRST MEETING: Introduction to Bayes Forum and Information field theory - turning data into images

Philipp Frank, Friday June 14 2024 at 14:00 MPA room E0.11 and online

Many inference tasks in observational astronomy take the form of reconstruction problems where the underlying quantities of interest are fields (functions of space, time and/or frequency) that have to be recovered from noisy and incomplete observational data. These problems are in general ill-posed, as many different field configurations may be consistent with the observed data. Their solutions take the form of very high-dimensional, non-Gaussian posterior probability distributions in general, and accessing the information in them poses a significant challenge. Geometric Variational Inference is one robust and scalable way to access this information approximately. It has proven to be both accurate and scalable enough to solve various real-world astrophysical inverse problems, ranging from the radio up to the gamma-ray regime.

Wiktor Mlynarski, Friday May 24 2024 at 14:00 MPA room E0.11 and online

Normative theories and statistical inference provide complementary approaches for the study of biological systems. A normative theory postulates that organisms have adapted to efficiently solve essential tasks and derives parameters that maximize the hypothesized organismal function ab initio, without reference to experimental data. In contrast, statistical (and in particular, Bayesian) inference focuses on efficient utilization of data to learn model parameters, without reference to any a priori notion of biological function. Traditionally, these two approaches were developed independently and applied separately. In this talk I will discuss how the synthesis of normative theories and data analysis can generate new insights into biological complexity. First, to highlight the significance of optimality theories, I will present a recently developed theory of adaptive sensory coding in dynamic environments. Second, I will demonstrate how normative and statistical approaches can be unified into a coherent Bayesian framework that embeds a normative theory into a family of maximum-entropy “optimization priors”. Throughout the talk I will use examples from neuroscience. Our approach is, however, substrate independent, and can be applied across different domains of biology.

Gordian Edenhofer, Thursday April 11 2024 at 14:00 MPA room E0.11 and online

Interstellar dust traces the mass in the ISM and is a crucial catalyst for star formation. To study the structure of the ISM and the conditions for star formation, we need to study the distribution of dust in 3D. However, we only observe interstellar dust in 2D projections. Reconstructing the 3D distribution of dust is a computationally intensive statistical inversion problem. The statistical methodology dictates the spatial resolution and dynamic range of the reconstructed map and is the main distinguishing factor between 3D dust maps. I will discuss the basic principle underlying 3D dust mapping and the spatial smoothness priors at their core. I will give an overview of Bayesian methods currently used for 3D dust mapping with a special focus on the methodology of the Edenhofer+23 3D dust map.

Alexander Alberts (Purdue University, USA), Thursday (!) March 28 2024 at 14:00 MPA room E0.11 and online

We demonstrate how unobserved fields in dynamical systems can be reconstructed through information field theory. In this Bayesian approach, we define an infinite-dimensional prior over the space of field configurations which is constrained on the dynamics. Additionally, we calibrate the parameters contained in the dynamics. Access to measurements of one state is sufficient to reconstruct the unobserved fields. We apply the methodology to an application where we model the temperature dynamics of a diesel combustion engine component.

Jakob Roth (MPA), March 1 2024 at 14:00 MPP (in Garching!) and online

Reconstructing the sky brightness from radio interferometric data is an inverse problem. As the data is usually incomplete and corrupted by observational effects, there is no unique solution to this inverse problem. This talk will present the RESOLVE algorithm for solving the imaging inverse problem in a probabilistic setting. More specifically, I will discuss how RESOLVE computes an estimate of the posterior probability distribution of possible sky images and data corruption effects. Furthermore, I will compare images obtained with RESOLVE to results from other algorithms. (talk as pdf)

Robert Koeberl (IPP), January 19 2024 at 14:00 CET at IPP in building D2 and online

A frequent starting point in the analysis and numerical modelling of physics in magnetically confined plasmas is the calculation of a magnetohydrodynamic (MHD) equilibrium from plasma profiles. Profiles, and therefore the equilibrium, are typically reconstructed from experimental data. What sets equilibrium reconstruction apart from usual inverse problems is that profiles are given as functions over a magnetic flux derived from the magnetic field, rather than spatial coordinates. This makes it a fixed-point problem that is traditionally left inconsistent or solved iteratively in a least-squares sense. To enable consistent uncertainty quantification for reconstructed 3D MHD equilibria, we propose a probabilistic framework that constructs a prior distribution of equilibria from a physics motivated prior of plasma profiles. Principal component analysis is utilized to find a low dimensional description of the equilibria and the associated prior distribution. This low dimensional representation enables the training of a surrogate of the forward model, which in turn allows a fast evaluation of the likelihood function in a Bayesian inference setting. Since the inference is performed on equilibrium parameters, the corresponding profiles can be calculated directly, guaranteeing consistency.

Daniele Oriti (LMU), 8 December 2023 14:00 (CET), MPA Lecture Hall E.0.11 and online

I introduce the problem of quantum gravity and the idea of space and time being emergent, non-fundamental notions, with usual spacetime physics being recovered in a suitable approximation of a non-spatiotemporal fundamental theory. I then describe a concrete example of such a non-spatiotemporal fundamental formalism (tensorial group field theory), in which the universe is a peculiar quantum many-body system of "atoms of space", and of the procedure leading, in a hydrodynamic approximation, to an effective cosmological dynamics formulated in the usual spacetime language, with some striking results. I also outline some elements of a possible quantum statistical foundation of the formalism based on Jaynes' principle, which proves general enough to be used in such a non-spatiotemporal context. I conclude with some remarks about foundational (and philosophical) issues raised by the idea of space and time not being fundamental, regarding the interpretation of quantum mechanics, the characterization of laws of nature, and agency. (talk as pdf)

24 November 15:30 coffee, 16:00 talk (CET), MPA Lecture Hall E.0.11 and online: Lukas Scheel-Platz (Institute of Biological and Medical Imaging, Helmholtz Zentrum München)

The gamma-ray sky recorded by the Fermi Large Area Telescope (LAT) is a superposition of emissions from many processes. To study them, a rich toolkit of analysis methods for gamma-ray observations has been developed, most of which rely on emission templates to model foreground emissions. In a recent publication my collaborators and I complemented these methods by presenting a template-free spatio-spectral imaging approach for the gamma-ray sky, based on a phenomenological modeling of its emission components. It is formulated in a Bayesian variational inference framework and allows a simultaneous reconstruction and decomposition of the sky into multiple emission components, enabled by a self-consistent inference of their spatial and spectral correlation structures. Additionally, we presented the extension of our imaging approach to template-informed imaging, which includes adding emission templates to our component models while retaining the “data-drivenness” of the reconstruction. In this talk, I will introduce the above-mentioned models, analyze the obtained data-driven reconstructions, and critically evaluate the performance of the models, highlighting strengths, weaknesses, and potential improvements. (talk as pdf)

21 September 13:30 (CEST), MPA Lecture Hall E.0.11 and online: Ingunn Kathrine Wehus (Oslo U. and Caltech)

The cosmic microwave background (CMB) gives us information about the earliest history of the Universe, shortly after the Big Bang. After half a century of more and more sensitive CMB observations, from ground, space and balloons, we now have dozens of valuable data sets available. Each of these has its own strengths and weaknesses, including sensitivity, resolution, frequency bands, sky fraction and systematics. Traditionally each experiment has been analyzed separately, which means that one is blind to the modes not observed by that particular instrument. When they are instead analyzed jointly, they break each other's degeneracies. Another benefit of joint analysis is that more data allows you to model and constrain the CMB and the foreground emissions from our own galaxy at the same time, which is needed to separate the different components and get the best constraint for the cosmological parameters. This type of joint global analysis is what the Cosmoglobe effort is all about. (talk as pdf)

21 September 14:00 (CEST), MPA Lecture Hall E.0.11 and online: Hans Kristian Eriksen (Inst. Theor. Astrophys., Oslo)

As the signal-to-noise ratio of available CMB data increases, it becomes more and more important to take into account all relevant effects at the same time, and in this talk I will present one specific framework that addresses this challenge head-on through global Bayesian modelling. This already played a major role in the analysis of ESA's Planck mission, and it is likely to play an even bigger role in next-generation experiments, as the importance of systematic uncertainties continues to increase. Furthermore, the fundamental lessons learned from this line of work are likely to be of interest to a wide range of other cosmological and astrophysical experiments as their sensitivity also continues to increase. (talk as pdf)

26 May 16:30, MPE New Seminar Room X5 1.1.18a and online: Michael Burgess (MPE)

Many modeling problems in physics have both local and population parameters which we would like to infer from observed data. As physicists, we have come up with clever ways to solve for both. However, statisticians have also looked at these problems and developed formal frameworks for them. These can be scary… and look a bit boring, but hierarchical modeling allows for a natural and statistically robust way of inferring both without resorting to asymptotic shortcuts that can damage the underlying connection between individual observations and their linked population distributions. I will show some toy and real problems in astrophysics as a guide to hierarchical modeling. (talk as pdf)

28 April 15:30, ESO Garching and online: Torsten Enßlin (MPA)

Information field theory (IFT) is information theory for fields. IFT is concerned with the non-parametric reconstruction of physical signals from data in a probabilistic, Bayesian manner. IFT has numerous applications in astrophysics, particle physics, and other fields. A decade after my first talk on IFT at the Bayes Forum in 2011, I would like to report on the progress that has been made since then. Progress has been made in the didactic presentation of IFT (which I hope will be demonstrated by this talk), in its numerical application (from a maximum a posteriori approximation to sophisticated variational inference schemes), in model complexity allowing algorithms to self-tune (using hyper-hyper-priors), in understanding its relationship to machine learning (IFT is AI), and in its application (in 2D, 3D, and 4D). (talk as pdf)

31 January 2020 at MPA: Francesca Rizzo (MPA)

The kinematics of high-redshift galaxies reveals important clues on their formation and assembly, providing also strong constraints on galaxy formation models. However, the studies of high-z galaxies, based on IFU observations, are limited by the spatial resolution. The Point Spread Function of the instrument has strong effects on the extraction of the kinematic maps, i.e. the velocity field and the velocity dispersion field. Gravitational lensing provides a unique tool to study the internal motions of galaxies, thanks to the increased sensitivity and angular resolution that is provided by the high magnifications. The combination of the magnifying power of gravitational lensing and the capabilities of adaptive optics or interferometric arrays makes it possible to obtain observations with higher spatial resolution and better sampling than is otherwise possible. I will present a new code that derives the rotation curves of lensed galaxies, using a 3D tilted-ring model. This software takes advantage of the redundant information contained in lensed data and it recovers reliable kinematics from a variety of different instruments including the new-generation IFUs and interferometric arrays (e.g., ALMA). I will describe the structure of the main algorithm and show that it performs much better than the standard 2D technique usually used for the extraction of the kinematic maps of high-z galaxies. Finally, I will discuss the application of this software on ALMA observations of the [CII] line from a lensed starburst galaxy at z=4.2.

31.1.2020, 14:00 at MPA New Seminar Room: Devon Powell (MPA)

Gravitational lensing by galactic potentials is a powerful tool with which to probe the abundance of low-mass dark matter structures in the universe. Dark matter substructures or line-of-sight haloes introduce small scale perturbations to the smooth lensing potential. By observing detections (or non-detections) of such low-mass perturbers in lensed systems, we can place constraints on the halo mass function and differentiate between different particle models for dark matter. The lowest detectable perturber mass is related to the angular resolution of the observation via its Einstein radius. Therefore, high angular resolution observations can place the strongest constraints on the low end of the halo mass function. In this talk, I will discuss the development of a fully Bayesian gravitational lens modeling code that directly fits large radio interferometric datasets from the Global Very Long Baseline Interferometry array (GVLBI). While the angular resolution is excellent (sufficient to detect haloes down to 10^6 solar masses at z~0.5-1.0), these data contain large numbers (>10^9) of visibilities and therefore present unique computational challenges for modeling. I will present the computational methods developed for this modeling process as well as results from GVLBI observations of the lensed radio jet MG J0751+2716.

Special Bayes Forum supported talk at the Ludwig Maximilians University on Tuesday, November 26 at 12:15: Maurice Weiler (University of Amsterdam)

The idea of equivariance to symmetry transformations provides one of the first theoretically grounded principles for neural network architecture design. In this talk we focus on equivariant convolutional networks for processing spatiotemporally structured signals like images or audio. We start by demanding equivariance w.r.t. global symmetries of the underlying space and proceed by generalizing the resulting design principles to local gauge transformations, thereby enabling the development of equivariant convolutional networks on general manifolds. Defining its feature spaces as spaces of feature fields, the theory of Gauge Equivariant Convolutional Networks shows intriguing parallels with fundamental theories in physics, in particular with the tetrad formalism of GR. In order to emphasize the coordinate-free fashion of gauge equivariant convolutions, we briefly discuss their formulation in the language of fiber bundles. Beyond unifying several lines of research in equivariant and geometric deep learning, Gauge Equivariant Convolutional Networks are demonstrated to yield greatly improved performance compared to conventional CNNs in a wide range of signal processing tasks.

October 25th 2019, 14:00, new seminar room, MPA: Philipp Eller (Origins Data Science Laboratory, Excellence cluster ORIGINS)

At the South Pole, in the middle of Antarctica, we have drilled deep holes down into the glacial ice to instrument a cubic kilometer of this highly transparent and pristine medium with very sensitive light sensors. This detector called “IceCube” allows us to capture the dim trace of light that some neutrinos leave behind when they interact in the ice. In order to use the collected raw data to answer scientific questions, a number of challenging data analysis problems need to be addressed. In this talk I will highlight some of the problems and solutions that I encounter in my personal research of neutrino oscillation physics. We will dive into the topics of event reconstruction and high statistics data analysis with small signals, discuss our current approaches and open issues.

July 5th 2019, 14:00, Room 401 - Old Seminar room at MPA: Jakob Knollmüller (MPA)

A variational Gaussian approximation of the posterior distribution can be an excellent way to infer posterior quantities. However, to capture all posterior correlations the parametrization of the full covariance is required, which scales quadratically with the problem size. This scaling prohibits full-covariance approximations for large-scale problems. As a solution to this limitation we propose Metric Gaussian Variational Inference (MGVI). This procedure approximates the variational covariance such that it requires no parameters on its own and still provides reliable posterior correlations and uncertainties for all model parameters. We approximate the variational covariance with the inverse Fisher metric, a local estimate of the true posterior uncertainty. This covariance is only stored implicitly and all necessary quantities can be extracted from it by independent samples drawn from the approximating Gaussian. MGVI requires the minimization of a stochastic estimate of the Kullback-Leibler divergence only with respect to the mean of the variational Gaussian, a quantity that scales linearly with the problem size. We motivate the choice of this covariance from an information geometric perspective. We validate the method against established approaches, and demonstrate its scalability into the regime of over a million parameters and its capability to capture posterior distributions over complex models with multiple components and strongly non-Gaussian prior distributions. (arXiv:1901.11033)

May 31st 2019, 14:00: New Seminar room at MPA: Michael Burgess (MPE)

Inferring the number, rate and intrinsic properties of short gamma-ray bursts has been a long-studied problem in the field. As it is closely related to the number of GW events expected for neutron star mergers, the topic has begun to be discussed in the literature again. However, the techniques used for GRBs still rely on improper statistical modeling, V/Vmax estimators and, in many cases, methods made for humor alone. I will discuss the use of Bayesian hierarchical models to infer population and object level parameters of inhomogeneous-Poisson process distributed populations. Techniques to handle high-dimensional selection effects will be introduced. The methodology will then be applied to sGRB population data with the aim of understanding how many of these objects there are, where they are in the Universe and what their properties are under given modeling assumptions. The methodology is general, thus extensions to other populations can be made easily.

May 3rd 2019, 14:00: New Seminar room at MPA: Martin Kerscher (LMU Munich)

I will review some of the common methods for model selection: the goodness of fit, the likelihood ratio test, Bayesian model selection using Bayes factors, and the classical as well as the Bayesian information theoretic approaches. I will illustrate these different approaches by comparing models for the expansion history of the Universe. I will highlight the premises and objectives entering these different approaches to model selection and finally recommend the information theoretic approach. (talk as pdf)
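As a minimal illustration of Bayesian model selection with Bayes factors, one of the approaches named in the abstract above, the following sketch compares two models for coin-flip data. It is not taken from the talk: the two models, the function names and the example counts are assumptions chosen for simplicity.

```python
from math import comb, exp, lgamma, log


def log_evidence_fair(n: int, k: int) -> float:
    """Log marginal likelihood of k heads in n flips under M0: p = 0.5 exactly."""
    return log(comb(n, k)) + n * log(0.5)


def log_evidence_uniform(n: int, k: int) -> float:
    """Log marginal likelihood under M1: unknown p with a Uniform(0, 1) prior.

    Integrating the binomial likelihood over p gives C(n, k) * B(k + 1, n - k + 1),
    where B is the Beta function; this simplifies to 1 / (n + 1).
    """
    log_beta = lgamma(k + 1) + lgamma(n - k + 1) - lgamma(n + 2)
    return log(comb(n, k)) + log_beta


def bayes_factor_10(n: int, k: int) -> float:
    """Bayes factor of M1 (unknown bias) against M0 (fair coin)."""
    return exp(log_evidence_uniform(n, k) - log_evidence_fair(n, k))


if __name__ == "__main__":
    # Illustrative (made-up) outcomes of 10 coin flips.
    for heads in (5, 8, 10):
        print(f"{heads} heads in 10 flips: BF10 = {bayes_factor_10(10, heads):.3f}")
```

A Bayes factor below 1 favours the fair coin and a value above 1 favours the unknown bias. The more flexible model M1 is penalised automatically because it spreads its prior probability over all values of p.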

March 29th 2019, 14:00: New Seminar room at MPA: Dirk Nille (MPI für Plasmaphysik)

Adaptive Kernel proved to be valuable in the evaluation of 1D profiles of positive additive distributions in inverse problems. This includes modelling photon fluxes as well as heat fluxes from plasma to the surrounding wall in fusion research. This multi-resolution concept is extended to 2D evaluation, aiming at inverse problems arising in the analysis of image data. Approaches for tackling the problem with optimisation routines as well as "classic" Random Walk and Hamiltonian Monte Carlo integration are presented.

March 28th 2019, 14:00: New Seminar room at MPA: Simon Bartels (MPI for Intelligent Systems Tübingen)

Linear equation systems (Ax=b) are at the core of many, more sophisticated numerical tasks. However, for large systems (e.g. least-squares problems in Machine Learning) it becomes necessary to resort to approximation. Typical approximation algorithms for linear equation systems tend to return only a point estimate of the solution but little information about its quality (maybe a residual or a worst-case error bound). Probabilistic linear solvers aim to provide more information in the form of a probability distribution over the solution. This talk will show how to design efficient probabilistic linear solvers, how they are connected to classic solvers and how preconditioning can be seen from an inference perspective.

January 11th 2019, 14:00: New Seminar room at MPA: Ulrich Schollwöck (LMU Munich)

Abstract: In this talk I will review Jaynes’ derivation of equilibrium statistical mechanics based on his celebrated principle. I will address certain subtleties arising for the classical and quantum-mechanical treatment respectively and discuss some of the arguments raised against his derivation. (talk as pdf)

November 16th 14:00: New Seminar room at MPA: Francesca Capel (KTH - Sweden)

Abstract: The study of UHECR is challenged by both the rarity of events and the difficulty in modelling their production, propagation and detection. The physics behind these processes is complicated, requiring high-dimensional models which are impossible to fit to data using traditional methods. I present a Bayesian hierarchical model which enables a joint fit of the UHECR energy spectrum and arrival directions with a physical model of UHECR phenomenology. In this way, possible associations with potential astrophysical sources can be assessed in a physically and statistically principled manner. The importance of including the UHECR energies is demonstrated through simulations and results from the application of the model to data from the Pierre Auger observatory are shown. The potential to extend this framework to more realistic physical models and multi-messenger observations is also discussed.

November 9th 14:00: New Seminar room at MPA: Jakob Knollmüller (MPA Garching)

Abstract: The abstract problem of recovering signals from data is at the core of any scientific progress. In astrophysics, the signal of interest is the Universe around us. It varies in space, time and frequency and it is populated by a large variety of phenomena. To capture some of its aspects, large and complex instruments are built. How can their information be combined consistently into one picture? UBIK allows data from multiple instruments to be fused within one unifying framework. A joint reconstruction of multiple instruments can provide a deeper picture of the same object, as it becomes far easier to distinguish between the signal and instrumental effects. Underlying UBIK is variational Bayesian inference, allowing joint uncertainty quantification for parameters. The first incarnation of UBIK demonstrates the versatility of this approach with a number of examples.

October 12th 14:00: New Seminar room at MPA: Tim Sullivan (Free University of Berlin and Zuse Institute Berlin)

Abstract: Numerical computation, such as numerical solution of a PDE, can be modelled as a statistical inverse problem in its own right. The popular Bayesian approach to inversion is considered, wherein a posterior distribution is induced over the object of interest by conditioning a prior distribution on the same finite information that would be used in a classical numerical method, thereby restricting attention to a meaningful subclass of probabilistic numerical methods distinct from classical average-case analysis and information-based complexity. The main technical consideration here is that the data are non-random and thus the standard Bayes' theorem does not hold. General conditions will be presented under which numerical methods based upon such Bayesian probabilistic foundations are well-posed, and a sequential Monte-Carlo method will be shown to provide consistent estimation of the posterior. The paradigm is extended to computational "pipelines", through which a distributional quantification of numerical error can be propagated. A sufficient condition is presented for when such propagation can be endowed with a globally coherent Bayesian interpretation, based on a novel class of probabilistic graphical models designed to represent a computational work-flow. The concepts are illustrated through explicit numerical experiments involving both linear and non-linear PDE models.

September 21st 14:00, New Seminar room at MPA: Philipp Hennig (University Tübingen)

The computational complexity of inference from data is dominated by the solution of non-analytic numerical problems (large-scale linear algebra, optimization, integration, the solution of differential equations). But a converse of sorts is also true: numerical algorithms for these tasks are inference engines! They estimate intractable, latent quantities by collecting the observable result of tractable computations. Because they also decide adaptively which computations to perform, these methods can be interpreted as autonomous learning machines. This observation lies at the heart of the emerging topic of Probabilistic Numerical Computation, which applies the concepts of probabilistic (Bayesian) inference to the design of algorithms, assigning a notion of probabilistic uncertainty to the result even of deterministic computations. I will outline how this viewpoint is connected to that of classic numerical analysis, and show that thinking about computation as inference affords novel, practical answers to the challenges of large-scale, big-data inference. talk as pdf

September 12th 14:00, New Seminar room at MPA: Johannes Buchner (PUC, Chile)

The data torrent unleashed by current and upcoming astronomical surveys demands scalable analysis methods. Machine learning approaches scale well. However, separating the instrument measurement from the physical effects of interest, dealing with variable errors, and deriving parameter uncertainties is usually an after-thought. Classic forward-folding analyses with Markov Chain Monte Carlo or Nested Sampling enable parameter estimation and model comparison, even for complex and slow-to-evaluate physical models. However, these approaches require independent runs for each data set, implying an unfeasible number of model evaluations in the Big Data regime. Here I present a new algorithm, collaborative nested sampling, for deriving parameter probability distributions for each observation. Importantly, the number of physical model evaluations scales sub-linearly with the number of data sets, and no assumptions about homogeneous errors, Gaussianity, the form of the model or heterogeneity/completeness of the observations need to be made. Collaborative nested sampling has application in speeding up analyses of large surveys, integral-field-unit observations, and Monte Carlo simulations. preprint: arXiv:1707.04476

July 27th: New Seminar room at MPA: Patrick van der Smagt (AI Research Lab, Volkswagen Munich, Germany)

Abstract: Neural networks have advanced to excellent candidates for probabilistic inference. In combination with variational inference, a powerful tool ensues with which efficient generative models can represent probability densities, avoiding the need for sampling. Not only does this lead to better generalisation, but such models can also be used to simulate highly complex dynamical systems. In my talk I will explain how we can predict time series observations, and use those to obtain efficient approximations to optimal control in complex agents. These unsupervised learning methods are demonstrated for time series modelling and control in robotic and other applications.

July 6th 14:00, New Seminar room at MPA: Matthias Niessner (TUM)

In this talk, I will cover our latest research on 3D reconstruction and semantic scene understanding. To this end, we use modern machine learning techniques, in particular deep learning algorithms, in combination with traditional computer vision approaches. Specifically, I will talk about real-time 3D reconstruction using RGB-D sensors, which enable us to capture high-fidelity geometric representations of the real world. In a new line of research, we use these representations as input to 3D Neural Networks that infer semantic class labels and object classes directly from the volumetric input. In order to train these data-driven learning methods, we introduce several annotated datasets, such as ScanNet and Matterport3D, that are directly annotated in 3D and allow tailored volumetric CNNs to achieve remarkable accuracy. In addition to these discriminative tasks, we put a strong emphasis on generative models. For instance, we aim to predict missing geometry in occluded regions, and obtain completed 3D reconstructions with the goal of eventual use in production applications. We believe that this research has significant potential for application in content creation scenarios (e.g., for Virtual and Augmented Reality) as well as in the field of Robotics where autonomous entities need to obtain an understanding of the surrounding environment.

June 29 14:00, Room 313, Max Planck Institute for Physics, Föhringer Ring 6, Munich: Rafael Schick (TUM & MPP)

Evaluating the integral of a density function, e.g. to calculate the Bayes Factor, can computationally be very costly or even impossible, all the more if the density function is complicated in that it is multi-modal or high-dimensional. However, if one has already generated samples from such a density function, e.g. by using a Markov Chain Monte Carlo algorithm, then the harmonic mean integration provides a technique that can directly utilize such samples in order to calculate an integration estimate. By restricting the harmonic mean integration to subvolumes one can avoid the well-known problem of having an infinite variance of the harmonic mean estimator.
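The subvolume idea in this abstract can be illustrated with a minimal sketch. It is not the speaker's implementation: the one-dimensional Gaussian target, the interval [-1, 1] and the function names are assumptions chosen for illustration, and direct Gaussian draws stand in for the output of an MCMC run.

```python
import math
import random


def unnormalised_density(x):
    """Toy target (an assumption): a standard Gaussian without its normalisation."""
    return math.exp(-0.5 * x * x)


def harmonic_mean_integral(samples, density, lower, upper):
    """Estimate the integral of `density` from samples drawn proportionally to it.

    Only samples inside the subvolume [lower, upper] enter the harmonic mean,
    so 1/density stays bounded there and the estimator has finite variance.
    """
    volume = upper - lower
    inverse_sum = sum(1.0 / density(x) for x in samples if lower <= x <= upper)
    # E[indicator(x in subvolume) / density(x)] = volume / integral, hence:
    return volume * len(samples) / inverse_sum


def demo(n_samples=50_000, seed=1):
    rng = random.Random(seed)
    # Direct Gaussian draws stand in for MCMC samples of the target.
    samples = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    return harmonic_mean_integral(samples, unnormalised_density, -1.0, 1.0)


if __name__ == "__main__":
    print("estimate:", demo(), "exact:", math.sqrt(2.0 * math.pi))
```

Restricting the sum to a region where the density is bounded away from zero is what keeps the terms 1/density from blowing up in the tails, which is the source of the infinite variance mentioned above.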

June 1st 14:00, Old Seminar room at MPA: Daniel Cremers (TUM)

While numerous low-level computer vision problems such as denoising, deconvolution or optical flow estimation were traditionally tackled with optimization approaches such as proximal methods, recently deep learning approaches trained on numerous examples demonstrated impressive and sometimes superior performance on respective tasks. In my presentation, I will discuss recent efforts to bring together these seemingly very different paradigms, showing how deep learning can profit from proximal methods and how proximal methods can profit from deep learning. This confluence makes it possible to boost deep learning approaches both in terms of drastically faster training times and in terms of substantial generalization to novel problems that differ from the ones they were trained for (generalization / domain adaptation).

4 May 2018 14:00 New Seminar room at MPA: Nico Piatkowski (University Dortmund)

Abstract: In order to give preference to a particular solution with desirable properties, a regularization term can be included in optimization problems. Particular types of regularization help us to solve ill-posed problems, avoid overfitting of machine learning models, and select relevant (groups of) features in data analysis. In this talk, I demonstrate how regularization identifies exponential family members with reduced computational requirements. More precisely, we will see how to (1) reduce the memory requirements of time-variant spatio-temporal probabilistic models, (2) reduce the arithmetic requirements of undirected probabilistic models, and (3) connect the parameter norm to the complexity of probabilistic inference to derive a new quadrature-based inference procedure.

talk as pdf

23 March 2018: New Seminar room at MPA: Torsten Enßlin and Margret Westerkamp (MPA)

Abstract: The rational solution of the Monty Hall problem unsettles many people. Most people, including us, think it feels wrong to switch the initial choice of one of the three doors, despite having fully accepted the mathematical proof for its superiority. Many people, if given the choice to switch, think the chances are fifty-fifty between their options, but still strongly prefer to stick to their initial choice. Is there some rationale behind these irrational feelings?

preprint
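The mathematical result that the abstract takes as given can be checked numerically. The following is a minimal simulation sketch, not taken from the talk or the preprint; the function names are hypothetical and it assumes the standard rules (three doors, and the host always opens a non-prize door other than the contestant's pick).

```python
import random


def play_monty_hall(switch, rng):
    """Play one round; return True if the contestant wins the prize."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    first_pick = rng.choice(doors)
    # The host opens a door that is neither the contestant's pick nor the prize.
    opened = rng.choice([d for d in doors if d != first_pick and d != prize])
    if switch:
        # Switch to the single remaining closed door.
        final_pick = next(d for d in doors if d != first_pick and d != opened)
    else:
        final_pick = first_pick
    return final_pick == prize


def win_rate(switch, n_rounds=100_000, seed=0):
    """Fraction of rounds won under a fixed strategy (always switch or always stay)."""
    rng = random.Random(seed)
    wins = sum(play_monty_hall(switch, rng) for _ in range(n_rounds))
    return wins / n_rounds


if __name__ == "__main__":
    print("stay:  ", win_rate(switch=False))
    print("switch:", win_rate(switch=True))
```

Under these rules the switching strategy should win in roughly two thirds of the rounds and the staying strategy in roughly one third.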

12 January 2018 14:00 New Seminar room at MPA: Mara Salvato (MPE)

Abstract: The increasing number of surveys available at any wavelength is allowing the construction of Spectral Energy Distributions (SEDs) for any kind of astrophysical object. However, a) different surveys/instruments, in particular at X-ray, UV and MIR wavelengths, have different positional accuracy and resolution, and b) the survey depths do not match each other, so that, depending on redshift and SED, a given source might or might not be detected at a certain wavelength. All this makes the pairing of sources among catalogs non-trivial, especially in crowded fields. In order to overcome this issue, we propose a new algorithm that combines the best of Bayesian and frequentist methods but that can be used as the common Likelihood Ratio (LR) technique in the simplest of applications. In this talk I will introduce the code and how it has been used for finding the ALLWISE counterparts to the X-ray ROSAT and XMMSLEW2 All-sky surveys.

talk as pdf

15 December 2017 14:00 New Seminar room at MPA: Sebastian Kehl (MPA)

Abstract: Measurement data used in the calibration of complex nonlinear computational models for the prediction of growth of abdominal aortic aneurysms (AAAs) - expanding balloon-like pathological dilations of the abdominal aorta, the prediction of which poses a formidable challenge in clinical practice - is commonly available as a sequence of clinical image data (MRT/CT/US). This data represents the Euclidean space in which the model is embedded as a submanifold. The necessary observation operator from the space of images to the model space is not straightforward and prone to the incorporation of systematic errors which will affect the predictive quality of the model. To avoid these errors, the formalism of surface currents is applied to provide a systematic description of surfaces representing a natural description of the computational model. The formalism of surface currents furthermore provides a convenient formulation of surfaces as random variables and thus allows for a seamless integration into a Bayesian formulation. However, this comes at an increased computational cost which adds to the complexity of the calibration problem induced by the cost for the involved model evaluations and the high stochastic dimension of the parameter spaces. To this end, a dimensionality reduction approach is introduced that accounts for a priori information given in terms of functions with bounded variation. This approach allows for the solution of the calibration problem via the application of advanced sampling techniques such as Sequential Monte Carlo.

13 October 2017 14:00 New Seminar room at MPA: Manfred Opper (TU Berlin)

Abstract: Variational methods provide tractable approximations to probabilistic and Bayesian inference for problems where exact inference is not tractable or Monte Carlo sampling approaches would be too time consuming. The method is highly popular in the field of machine learning and is based on replacing the exact posterior distribution by an approximation which belongs to a tractable family of distributions. The approximation is optimised by minimising the Kullback-Leibler divergence between the distributions. In this talk I will discuss applications of this method to inference problems for stochastic processes, where latent variables are very high- or infinite-dimensional. I will illustrate this approach on three problems: 1) the estimation of hidden paths of stochastic differential equations (SDE) from discrete time observations, 2) the nonparametric estimation of the drift function of SDE and 3) the analysis of neural spike data using a dynamical Ising model.

talk as pdf

22 September 2017 14:00 New Seminar room at MPA: Licia Verde (Univ. Barcelona)

Abstract: The Bayesian approach has been the standard one in cosmology for the analysis of all major recent datasets. I will present a Bayesian approach to two open issues. One is the tension between different data sets and in particular the value of the Hubble constant inferred from CMB measurement and directly measured in the local Universe. It can be used as a tool to look for systematic errors and/or for new physics. The other one is about neutrino mass ordering. Cosmology, in conjunction with neutrino oscillations results, has already indicated that the mass ordering is hierarchical. A Bayesian approach enables us to determine the odds of the normal vs inverted hierarchy. I will discuss caveats and implications of this.

4 August 2017: Jan Hasenauer (Institute of Computational Biology, Munich)

Abstract: A rigorous assessment of parameter uncertainties is important across scientific disciplines. In this talk, I will introduce profile methods [1] for differential equation models. I will review established optimization-based methods for profile calculation and touch upon integration-based approaches [2] and novel hybrid schemes. The properties of profile likelihoods will be discussed and the relation to Bayesian methods for uncertainty analysis. Finally, I will show a few application examples in the field of systems biology [3].

References: [1] Murphy & van der Vaart, J. Am. Stat. Assoc., 95(450): 449-485, 2000. [2] Chen & Jennrich, J. Comput. Graphical Statist., 11(3): 714-732, 2002. [3] Hug et al., Math. Biosci., 246(2): 293-304, 2013.

talk as pdf

21 July 2017 14:00 New Seminar room at MPA: Frederik Beaujean (LMU / Universe Cluster)

Abstract: One of the big challenges in astrophysics is the comparison of complex simulations to observations. As many codes do not directly generate observables (e.g. hydrodynamic simulations), the last step in the modelling process is often a radiative-transfer treatment. For this step, the community relies increasingly on Monte Carlo radiative transfer due to the ease of implementation and scalability with computing power. I show a Bayesian way to estimate the statistical uncertainty for radiative-transfer calculations in which both the number of photon packets and the packet luminosity vary. Our work is motivated by the TARDIS radiative-transfer supernova code developed at ESO and MPA by Wolfgang Kerzendorf et al. Speed is an issue, so I will develop various approximations to the exact expression that are computationally more expedient. Beyond TARDIS, the proposed method is applicable to a wide spectrum of Monte Carlo simulations including particle physics. In comparison to frequentist methods, it is particularly powerful in extracting information when the data are sparse but prior information is available.

14 July 2017 14:00 MPA room 006: Maximilian Totzauer (MPP Munich)

Abstract: In this talk, I will present the results of a global Bayesian analysis of currently available neutrino data. This analysis will put data from neutrino oscillation experiments, neutrinoless double beta decay, and precision cosmology on an equal footing. I will use this setup to evaluate the discovery potential of future experiments. Furthermore, Bayes factors of the two possible neutrino mass ordering schemes (normal or inverted) will be derived for different prior choices. The latter will show that the indication for the normal mass ordering is still very mild and is mainly driven by oscillation experiments, while it does not strongly depend on realistic prior assumptions or on different combinations of cosmological data sets. Future experiments will be shown to have a significant discovery potential, depending on the absolute neutrino mass scale, the mass ordering scheme and the achievable background level of the experiments.

21 April 2017 Friday: Vanessa Boehm (MPA)

Abstract: Tomographic lensing measurements offer a powerful probe for time-resolving the clustering of matter over a wide range of scales. Since full, high resolution reconstructions of the matter field from cosmic shear measurements are ill-constrained, they commonly rely on spatial averages and prior assumptions about the noise and signal statistics. I will present a new, Bayesian method to perform such a reconstruction. Our likelihood can take into account individual galaxy contributions, thereby also accounting for their individual redshift uncertainties. As a prior, we use a lognormal distribution which significantly better captures the non-linear properties of the underlying matter field than the commonly used Gaussian prior. After reviewing the algorithm itself, I will show results from testing it on mock data generated from fully non-linear density distributions. These tests reveal the superiority of the lognormal prior over existing methods in regions with high signal-to-noise.

17 Mar 2017 Friday: Ata Metin (AIP Potsdam)

Abstract: In the current cosmological understanding the clustering and dynamics of galaxies is driven by the underlying dark matter budget. I will present a framework to jointly reconstruct the density and velocity field of the cosmological large-scale structure of dark matter. To this end, I introduce the ARGO code, a statistical reconstruction method, and will focus on the bias description I use to connect galaxy and dark matter density as well as the perturbative description to correct for redshift-space distortions arising from galaxy redshift surveys. Finally I will discuss the results of the application of ARGO to the SDSS BOSS galaxy catalogue.

10 Mar 2017 Friday: Reimar Leike (MPA)

Abstract: This talk addresses two related topics: 1) Axiomatic information theory 2) Simulation scheme construction. 1) Bayesian reasoning allows for high-fidelity predictions as well as consistent and exact error quantification. However, in many cases approximations have to be made in order to obtain a result at all, for example when computing predictions about fields which have degrees of freedom for every point in space. How to quantify the error that is introduced through an approximation? This problem can be phrased as a communication task where it is impossible to communicate a full Bayesian probability through a limited-bandwidth channel. One can request that an abstract ranking function quantifies how 'embarrassing' it is to communicate a different probability. Surprisingly, from very Bayesian axioms it follows that a unique ranking function exists that is equivalent to the Shannon information loss of the approximation. 2) This ranking function can then be applied to information field dynamics, where one has finite data in computer memory that contains information about an evolving field. A simulation scheme is defined uniquely by requiring minimal information loss. This is demonstrated by a working example and the prospects of this new perspective on computer simulations are discussed.

24 Feb 2017 Friday: Fabrizia Guglielmetti (ESO)

Abstract: Image interpretation is an ill-posed inverse problem, requiring inference theory methods for a robust solution. Bayesian statistics provides the proper principles of inference to solve the ill-posedness in astronomical images, enabling explicit declaration on relevant information entering the physical models. Furthermore, Bayesian methods require the application of models that are moderately to extremely computationally expensive. Often, the Maximum a Posteriori (MAP) solution is used to estimate the most probable signal configuration (and uncertainties) from the posterior pdf of the signal given the observed data. Locating the MAP solution becomes a numerically challenging problem, especially when estimating a complex objective function defined in a high-dimensional design domain. Therefore, there is the need to utilize fast emulators for much of the required computations. We propose to use Kriging surrogates to speed up optimization schemes, like steepest descent. Kriging surrogate models are built and incorporated in a sequential optimization strategy. Results are presented with application on astronomical images, showing the proposed method can effectively search the global optimum.

talk as pdf

Talks in 2016

2 December 2016 Friday: Steven Gratton (Institute of Astronomy, Cambridge)

Abstract: This talk will introduce a general scheme for computing posteriors in situations in which only a partial description of the data's sampling distribution is available. The method is primarily calculation-based and uses maximum entropy reasoning.

18 November 2016 Friday: David Yu (MPE)

Abstract: We describe a novel paradigm for gamma-ray burst (GRB) location and spectral analysis, BAyesian Location Reconstruction Of GRBs (BALROG). The Fermi Gamma-ray Burst Monitor (GBM) is a gamma-ray photon counting instrument. The observed GBM photon flux is a convolution between the true energy flux and the detector response matrices (DRMs) of all the GBM detectors. The DRMs depend on the spacecraft orientation relative to the GRB location on the sky. Precise and accurate spectral deconvolution thus requires accurate location; precise and accurate location also requires accurate determination of the spectral shape. We demonstrate that BALROG eliminates the systematics of conventional approaches by simultaneously fitting for the location and spectrum of GRBs. It also correctly incorporates the uncertainties in the location of a transient into the spectral parameters and produces reliable positional uncertainties for both well-localized GRBs and those for which the conventional GBM analysis method cannot effectively constrain the position.

talk as pdf

October 2016 Friday: Reinhard Prix (Albert-Einstein-Institut Hannover)

Abstract: Bayesian methods have found a number of uses in the search for gravitational waves, starting from parameter-estimation problems (the most obvious application) to detection methods. I will give a short overview of the various Bayesian applications (that I am aware of) in this field, and will then mostly focus on a few selected examples that I'm the most familiar with and that highlight particular challenges or interesting aspects of this approach.

talk as pdf

16 September 2016 Friday: Ruediger Schack (Royal Holloway College, Univ. London, UK)

Abstract: QBism is an approach to quantum theory which is grounded in the personalist conception of probability pioneered by Ramsey, de Finetti and Savage. According to QBism, a quantum state represents an agent's personal degrees of belief regarding the consequences of her actions on her external world. The quantum formalism provides consistency criteria that enable the agent to make better decisions. This talk gives an introduction to QBism and addresses a number of foundational topics from a QBist perspective, including the question of quantum nonlocality, the quantum measurement problem, the EPR (Einstein, Podolsky and Rosen) criterion of reality, and the recent no-go theorems for epistemic interpretations of quantum states.

29 July 2016 Friday: Henrik Junklewitz (Argelander Institute fuer Astronomie, Bonn)

Abstract: Imaging and data analysis are becoming a more and more pressing issue in modern astronomy, with ever larger and more complex data sets available to the scientist. This is particularly true in radio astronomy, where a number of new interferometric instruments are now available or will be in the foreseeable future, offering unprecedented data quality but also posing challenges to existing data analysis tools. In this talk, I present the growing RESOLVE package, a collection of newly developed radio interferometric imaging methods firmly based on Bayesian inference and Information field theory. The algorithm package can handle the total intensity image reconstruction of extended and point sources, take multi-frequency data into account, and is in the development stage for polarization analysis as well. It is the first radio imaging method to date that can provide an estimate of the statistical image uncertainty, which is not possible with current standard methods. The presentation includes a theoretical introduction to the inference principles being used as well as a number of application examples.

17 June 2016 Friday: Maksim Greiner (MPA)

Abstract: Tomography problems can be found in astronomy and medical imaging. They are complex inversion problems which demand a regularization. I will demonstrate how a Bayesian setup automatically provides such a regularization through the prior. The setup is applied to two realistic scenarios: Galactic tomography of the free electron density and medical computed tomography.

talk as pdf

29 April 2016: Kevin H. Knuth (University at Albany (SUNY))

Abstract: A theory of logical inference should be encompassing, applying to any subject about which inferences are to be made. This includes problems ranging from the early applications of games of chance, modern applications involving astronomy, biology, chemistry, geology, jurisprudence, physics, signal processing, sociology, and even quantum mechanics. This talk focuses on how the theory of inference has evolved in recent history: expanding in scope, solidifying its foundations, deepening its insights, and growing in calculational power.

talk as pdf

28 April 2016: Additional talk by Kevin H. Knuth at Excellence Cluster

talk as pdf

4 April 2016: James Berger (Duke Univ.)

Abstract: Issues of multiplicity in testing are increasingly being encountered in a wide range of disciplines, as the growing complexity of data allows for consideration of a multitude of possible tests (e.g., look-elsewhere effects in Higgs discovery, Gravitational wave discovery, etc). Failure to properly adjust for multiplicities is one of the major causes for the apparently increasing lack of reproducibility in science. The way that Bayesian analysis does (and sometimes does not) deal with multiplicity will be discussed. Different types of multiplicities that are encountered in science will also be introduced, along with discussion of the current status of multiplicity control (or lack thereof).

talk as pdf

4 March 2016: Stephan Hartmann (LMU, Mathematical Philosophy Dept.)

Abstract: Modeling how to learn an indicative conditional ('if A then B') has been a major challenge for Bayesian epistemologists. One proposal to meet this challenge is to construct the posterior probability distribution by minimizing the Kullback-Leibler divergence between the posterior probability distribution and the prior probability distribution, taking the learned information as a constraint (expressed as a conditional probability statement) into account. This proposal has been criticized in the literature based on several clever examples. In this talk, I will revisit four of these examples and show that one obtains intuitively correct results for the posterior probability distribution if the underlying probabilistic models reflect the causal structure of the scenarios in question.

talk as pdf

19 February 2016: Allen Caldwell (MPP)

Abstract: There is considerable confusion in our community concerning the definition and meaning of frequentist confidence intervals and Bayesian credible intervals. We will review how they are constructed and compare and contrast what they mean and how to use them. In particular for frequentist intervals, there are different popular constructions; they will be defined and compared with the Bayesian intervals on examples taken from typical situations faced in experimental work.

talk as pdf

18 February 2016: Frederik Beaujean (LMU)

Abstract: There is considerable confusion among physicists regarding the two main interpretations of probability theory. I will review the basics and then discuss some common mistakes to clarify which questions can be asked and how to interpret the numbers that come out.

22 January 2016: Daniel Pumpe (MPA)

Abstract: Stochastic differential equations (SDE) describe well many physical, biological and sociological systems, despite the simplification often made in their description. Here the usage of simple SDE to characterize and classify complex dynamical systems is proposed within a Bayesian framework. To this end, the algorithm 'dynamic system classifier' (DSC) is developed. The DSC first abstracts training data of a system in terms of time dependent coefficients of the descriptive SDE. This then permits the DSC to identify unique features within the training data. For definiteness we restrict ourselves to oscillation processes with a time-wise varying frequency w(t) and damping factor y(t). Although real systems might be more complex, this simple oscillating SDE with time-varying coefficients can capture many of their characteristic features. The w and y timelines represent the abstract system characterization and permit the construction of efficient classifiers. Numerical experiments show that the classifiers perform well even in the low signal-to-noise regime.

talk as pdf

Talks in 2015

Jan Leike (Australian National University)

Abstract: Reinforcement learning (RL) is the area of machine learning that studies algorithms that learn to act in an unknown environment through trial and error; the goal is to maximize a numeric reward signal. We introduce the Bayesian RL agent AIXI that is based on the universal (Solomonoff) prior. This prior is incomputable and thus our focus is not on practical algorithms, but rather on the fundamental problems with the Bayesian approach to RL and potential solutions.

talk as pdf

11 December 2015: Will Handley (Kavli Institute, Cambridge)

Abstract: PolyChord is a novel Bayesian inference tool for high-dimensional parameter estimation and model comparison. It represents the latest advance in nested sampling technology, and is the natural successor to MultiNest. The algorithm uses John Skilling's nested sampling, utilising a slice-sampling Markov-Chain-Monte-Carlo approach for the generation of new live points. It has cubic scaling with dimensionality, and is capable of exploring highly degenerate multi-modal distributions. Further, it is capable of exploiting a hierarchy of parameter speeds present in many cosmological likelihoods. In this talk I will give a brief account of nested sampling, and the workings of PolyChord. I will then demonstrate its efficacy by application to challenging toy likelihoods and real-world cosmology problems.

talk as pdf, PolyChord website
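
For readers unfamiliar with nested sampling, the sketch below shows the basic loop on a one-dimensional toy problem: a unit Gaussian likelihood with a uniform prior on [-5, 5], for which the evidence is very close to 0.1. It is only an illustration under these assumptions. New live points are drawn by plain rejection sampling from the prior, which is not the slice-sampling scheme PolyChord uses and would not scale to high dimensions; all function names are hypothetical and unrelated to the PolyChord interface.

```python
import math
import random

def log_likelihood(x):
    """Unit-variance Gaussian likelihood centred on zero (toy assumption)."""
    return -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)

def nested_sampling(n_live=100, n_iter=600, seed=1):
    rng = random.Random(seed)

    def draw_prior():
        return rng.uniform(-5.0, 5.0)  # uniform prior on [-5, 5]

    live = [draw_prior() for _ in range(n_live)]
    evidence = 0.0
    x_prev = 1.0  # prior volume enclosed by the current likelihood contour
    for i in range(1, n_iter + 1):
        # Find the live point with the lowest likelihood.
        worst = min(range(n_live), key=lambda k: log_likelihood(live[k]))
        logl_worst = log_likelihood(live[worst])
        # Deterministic estimate of the volume shrinkage per iteration.
        x_now = math.exp(-i / n_live)
        evidence += math.exp(logl_worst) * (x_prev - x_now)
        x_prev = x_now
        # Replace it by a prior draw inside the contour (rejection sampling).
        while True:
            candidate = draw_prior()
            if log_likelihood(candidate) > logl_worst:
                live[worst] = candidate
                break
    # Add the contribution of the volume still covered by the live points.
    evidence += x_prev * sum(math.exp(log_likelihood(x)) for x in live) / n_live
    return evidence

evidence = nested_sampling()
```

The rejection step is the part that becomes prohibitively expensive as the contour tightens, which is why practical samplers replace it with smarter constrained sampling.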

27 November 2015: Hans Eggers (Univ. Stellenbosch; TUM; Excellence Cluster)

Hans Eggers and Michiel de Kock

Abstract: Computational approaches to model comparison are often necessary, but they are expensive. Analytical Bayesian methods remain useful as benchmarks and signposts. While Gaussian likelihoods with linear parametrisations are usually considered a closed subject, more detailed inspection reveals successive layers of issues. In particular, comparison of models with parameter spaces of different dimension inevitably retains an unwanted dependence on prior metaparameters such as the cutoffs of uniform priors. Reducing the problem to symmetry on the hypersphere surface and its radius, we formulate a prior that treats different parameter space dimensions fairly. The resulting "r-prior" yields closed forms for the evidence for a number of choices, including three priors from the statistics literature which are hence just special cases. The r-prior may therefore point the way towards a better understanding of the inner mathematical structure which is not yet fully understood. Simple simulations show that the current interim formulation performs as well as other model comparison approaches. However, the performance of the different approaches varies considerably, and more numerical work is needed to obtain a comprehensive picture.

talk as pdf

16 October 2015: Christian Robert (Universite Paris-Dauphine)

Abstract: Introduced in the late 1990's, the ABC method can be considered from several perspectives, ranging from a purely practical motivation towards handling complex likelihoods to non-parametric justifications. We propose here a different analysis of ABC techniques and in particular of ABC model selection. Our exploration focuses on the idea that generic machine learning tools like random forests (Breiman, 2001) can help in conducting model selection among the highly complex models covered by ABC algorithms. Both theoretical and algorithmic output indicate that posterior probabilities are poorly estimated by ABC. I will describe how our search for an alternative first led us to abandoning the use of posterior probabilities of the models under comparison as evidence tools. As a first substitute, we proposed to select the most likely model via a random forest procedure and to compute posterior predictive performances of the corresponding ABC selection method. It is only recently that we realised that random forest methods can also be adapted to the further estimation of the posterior probability of the selected model. I will also discuss our recommendation towards sparse implementation of the random forest tree construction, using severe subsampling and reduced reference tables. The performances in terms of power in model choice and gain in computation time of the resulting ABC-random forest methodology are illustrated on several population genetics datasets.

talk as pdf

29 September 2015: John Skilling (MEDC)

Abstract: Tomography measures the density of a body (usually by its opacity to X-rays) along multiple lines of sight. From these line integrals, we reconstruct an image of the density as a function of position, p(x). The user then interprets this in terms of material classification (in medicine, bone or muscle or fat or fluid or cavity, etc.).

Opacity data -> invert -> Density image -> interpret -> Material model

Bayesian analysis does not need to pass through the intermediate step of density: it can start directly with a prior probability distribution over plausible material models. This expresses the user's judgement about which models are thought plausible, and which are not.

Opacity data -> Bayes -> Material model

That prior is modulated by the data (through the likelihood function) to give the posterior distribution of those images that remain plausible after the data are used. Usually, only a tiny proportion O(e^(-size of dataset)) of prior possibilities survives into the posterior, so that Bayesian analysis was essentially impossible before computers. Even with computers, direct search is impossible in large dimension, and we need numerical methods such as nested sampling to guide the exploration. But this can now be done, easily and generally. The exponential curse of dimensionality is removed. Starting directly from material models enables data fusion, where different modalities (such as CT, MRI, ultrasound) can all be brought to bear on the same material model, with results that are clearer and more informative than any individual analysis. The aim is to use sympathetic prior models along with modern algorithms to advance the state of the art.

talk as pdf

24 July 2015: Maria Bergemann (MPIA Heidelberg)

Abstract: Spectroscopic stellar observations have shaped our understanding of stars and galaxies. This is because spectra of stars are the only way to determine their chemical composition, which is the fundamental resource to study cosmic nucleosynthesis in different environments and on different time-scales. Research in this field has never been more exciting and important to astronomy: the ongoing and future large-scale stellar spectroscopic surveys are making gigantic steps along the way towards high-precision stellar, Galactic, and extra-galactic archaeology. However, the data we extract from stellar spectra are not, strictly speaking, 'observational'. These data (fundamental parameters and chemical abundances) rely heavily upon physical models of stars and statistical methods to compare the model predictions with raw observations. I will describe our efforts to provide the most realistic models of stellar spectra, based upon 3D non-local thermodynamic equilibrium physics, and the new Bayesian spectroscopy approach for the quantitative analysis of the data. I will show how our new methods and models transform quantitative spectroscopy, and discuss implications for stellar and Galactic evolution.

talk as pdf

17 July 2015: Igor Ovchinnikov (UCLA)

Abstract: Prominent long-range phenomena in natural dynamical systems such as the 1/f and flicker noises, and the power-law statistics observed, e.g., in solar flares, gamma-ray bursts, and neuroavalanches are still not fully understood. A new framework sheds light on the mechanism of emergence of these rich phenomena from the point of view of stochastic dynamics of their underlying systems. This framework exploits an exact correspondence relation of any stochastic differential equation (SDE) with a dual (topological) supersymmetric model. This talk introduces the basic ideas of the framework. It will show that the emergent long-range dynamical behavior in Nature can be understood as the result of the spontaneous breakdown of the topological supersymmetry that all SDEs possess. Surprisingly enough, the concept of supersymmetry, devised mainly for particle physics, has direct applicability and predictive power, e.g., over the electrochemical dynamics in a human brain. The mathematics of the framework will be detailed in a separate lecture series on "Dynamical Systems via Topological Field Theory" at MPA from July 27th to July 31st.

talk as pdf

26 June 2015: Volker Schmid (Statistics Department of LMU Munich)

Abstract: Compartment models are used as biological models for the analysis of a variety of imaging data. Perfusion imaging, for example, aims to investigate the kinetics in human tissue in vivo via a contrast agent. Using a series of images, the exchange of contrast agent between different compartments in the tissue over time is of interest. Using the analytic solution of a system of differential equations, nonlinear parametric functions are gained and can be fitted to the data at a voxel level. However, the estimation of the parameters is unstable, in particular in models with more compartments. To this end, we use Bayesian regularization to gain stable estimators along with credible intervals. Prior knowledge is determined about the context of the local compartment models. Here, context can refer to spatial information, potentially including edges in the tissue. Additionally, patient or study specific information can be used, in order to develop a comprehensive model for the analysis of a set of images at once. I will show the application of fully Bayesian multi-compartment models in dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) and fluorescence recovery after photo bleaching (FRAP) microscopy. Additionally, I will discuss some alternative non-Bayesian approaches using the same or similar prior information.

19 June 2015: Emille Ishida (MPA)

Abstract: Approximate Bayesian Computation (ABC) enables parameter inference for complex physical systems in cases where the true likelihood function is unknown, unavailable, or computationally too expensive. It relies on the forward simulation of mock data and comparison between observed and synthetic catalogues. In this talk I will go through the basic principles of ABC and show how it has been used in astronomy recently. As a practical example, I will present "cosmoabc", a Python ABC sampler featuring a Population Monte Carlo variation of the original ABC algorithm, which uses an adaptive importance sampling scheme.

talk as pdf, Paper: Ishida et al, 2015, Code, Code, Documentation
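
The forward-simulate-and-compare principle described in the abstract above can be sketched with the simplest ABC variant, plain rejection sampling. This is not the Population Monte Carlo scheme of cosmoabc and does not use its interface; the toy model (a Gaussian with unknown mean), the summary statistic (the sample mean), the tolerance, and all function names are assumptions chosen for illustration.

```python
import random
import statistics

def abc_rejection(observed, prior_sample, simulate, distance, epsilon, n_draws, rng):
    """Keep prior draws whose simulated data lie within epsilon of the observation."""
    accepted = []
    for _ in range(n_draws):
        theta = prior_sample(rng)          # draw a parameter from the prior
        mock = simulate(theta, rng)        # forward-simulate mock data
        if distance(mock, observed) <= epsilon:
            accepted.append(theta)         # the likelihood is never evaluated
    return accepted

rng = random.Random(0)
# Toy "observation": 50 draws from a Gaussian with mean 2 and unit variance.
observed = [rng.gauss(2.0, 1.0) for _ in range(50)]

posterior_draws = abc_rejection(
    observed,
    prior_sample=lambda r: r.uniform(-5.0, 5.0),
    simulate=lambda theta, r: [r.gauss(theta, 1.0) for _ in range(50)],
    distance=lambda a, b: abs(statistics.fmean(a) - statistics.fmean(b)),
    epsilon=0.2,
    n_draws=5000,
    rng=rng,
)
abc_mean = statistics.fmean(posterior_draws)
```

Shrinking `epsilon` tightens the approximation but lowers the acceptance rate, which is the inefficiency that adaptive schemes such as Population Monte Carlo aim to reduce.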

8 May 2015: Allen Caldwell (MPP)

Abstract: Deciding whether a model provides a good description of data is often based on a goodness-of-fit criterion summarized by a p-value. Although there is considerable confusion concerning the meaning of p-values, leading to their misuse, they are nevertheless of practical importance in common data analysis tasks. We motivate their application using a Bayesian argumentation. We then describe commonly and less commonly known discrepancy variables and how they are used to define p-values. The distributions of these are then extracted for examples modeled on typical data analysis tasks, and comments on their usefulness for determining goodness-of-fit are given. (Based on a paper by Frederik Beaujean, Allen Caldwell, Daniel Kollar, Kevin Kroeninger.)

talk as pdf

24 April 2015: Udo von Toussaint (IPP)

Abstract: The quantification of uncertainty for complex simulations is of increasing importance. However, standard approaches like Monte Carlo sampling quickly become infeasible. Therefore Bayesian and non-Bayesian probabilistic uncertainty quantification methods like polynomial chaos (PC) expansion methods or Gaussian processes have found increasing use over recent years. This presentation focuses on the concepts and use of non-intrusive collocation methods for the propagation of uncertainty in computational models using illustrative examples as well as real-world problems, i.e. the Vlasov-Poisson equation with uncertain inputs.

27 February 2015: Maximilian Imgrund (USM/LMU Munich and MPIfR Bonn)

Abstract: Pulsars' extremely predictable timing data are used to detect signals from fundamental physics such as the general theory of relativity. To fit for the actual parameters of interest, the rotational phase of these compact and fast rotating objects is modeled and then matched with observational data by using the times of arrival (ToAs) of a certain rotational phase tracked over years of observations. While there exist Bayesian methods to infer the parameters' pdfs from the ToAs, the standard methods to generate these ToAs rely on simply averaging the data received by the telescope. Our novel Bayesian method acting on the single pulse level may give both more precise results and a more accurate error estimation.

30 January 2015: Torsten Ensslin (MPA)

Abstract: How to optimally describe an evolving field on a finite computer? How to assimilate measurements and statistical knowledge about a field into a simulation? Information field dynamics is a novel conceptual framework to address such questions from an information-theoretical perspective. It has already proven to provide superior simulation schemes in a number of applications.

Talks in 2014

19 December 2014: Max Knoetig (Institute for Particle Physics, ETH Zuerich)

Abstract: Discusses the on/off problem that consists of two counting measurements, one with and one without a possible contribution from a signal process. The task is to infer the strength of the signal; e.g., of a gamma-ray source. While this sounds pretty basic, Max will present a first analytical Bayesian solution valid for any count (incl. zero) that supersedes frequentist results valid only for large counts. The assumptions he makes have to be reconciled with our intuition of the foundations of Bayesian probability theory.

talk as pdf
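
To make the on/off setting in the abstract above concrete, the sketch below computes a posterior for the signal strength numerically on a grid. It is not the analytical solution presented in the talk. It assumes Poisson counts, flat priors on the signal rate s and the background rate b, and an exposure ratio `alpha` between the on and off measurements; the counts and all names are hypothetical.

```python
import math

def log_poisson(n, mu):
    """Log of the Poisson probability of n counts given a mean mu > 0."""
    return n * math.log(mu) - mu - math.lgamma(n + 1)

def signal_posterior(n_on, n_off, alpha, s_max, b_max, n_grid=200):
    """Posterior density of the signal rate s, with the background b marginalised.

    Assumed model: n_on ~ Poisson(s + alpha * b), n_off ~ Poisson(b), flat priors.
    """
    ds, db = s_max / n_grid, b_max / n_grid
    # Midpoint grids avoid evaluating the Poisson term at a mean of exactly zero.
    s_grid = [(i + 0.5) * ds for i in range(n_grid)]
    b_grid = [(j + 0.5) * db for j in range(n_grid)]
    unnormalised = []
    for s in s_grid:
        marginal = sum(
            math.exp(log_poisson(n_on, s + alpha * b) + log_poisson(n_off, b))
            for b in b_grid
        ) * db
        unnormalised.append(marginal)
    norm = sum(unnormalised) * ds
    return s_grid, [p / norm for p in unnormalised], ds

s_grid, posterior, ds = signal_posterior(
    n_on=30, n_off=40, alpha=0.25, s_max=60.0, b_max=100.0
)
# Grid point with the highest posterior density.
s_mode = s_grid[max(range(len(posterior)), key=posterior.__getitem__)]
```

With these made-up counts the posterior peaks near the naive estimate n_on - alpha * n_off; the grid approach also runs for zero counts, the regime the abstract says large-count frequentist results do not cover.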

24 October 2014: Frederik Beaujean (LMU)