
Recent progress in observational cosmology has established a standard model for the material content of the Universe and for its initial conditions for structure formation, some 300,000 years after the Big Bang. Most of the matter in the Universe (~85% by mass) consists of dark matter, an as yet unidentified, weakly interacting elementary particle. Initial fluctuations in this mass component, seeded by an early inflationary epoch, are amplified by gravity as the Universe expands and eventually collapse to form the galaxies we see today. To model this highly non-linear and intrinsically three-dimensional process, the matter fluid can be represented by a collisionless N-body system that evolves under self-gravity. It is crucial, however, to make the number of particles in such a simulation as large as possible. The particle number sets the mass resolution, and hence how faithfully the simulation can represent the Universe.
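The N-body idea described above can be illustrated with a minimal sketch. It computes softened pairwise gravitational accelerations by direct summation and advances the particles with a kick-drift-kick leapfrog integrator. The units (G = 1), the Plummer softening, the toy two-body setup and all function names are hypothetical choices made for illustration. Direct summation costs O(N²) per step, and this sketch ignores cosmic expansion. It therefore conveys only the basic principle: production cosmological codes work in comoving coordinates and rely on much faster approximate force schemes such as hierarchical tree and particle-mesh methods.

```python
import math

G = 1.0  # gravitational constant in arbitrary code units (assumption)


def accelerations(pos, mass, eps):
    """Pairwise (direct-summation) accelerations with Plummer softening eps."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2 + eps ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                # Newton's third law: equal and opposite contributions
                acc[i][k] += G * mass[j] * d[k] * inv_r3
                acc[j][k] -= G * mass[i] * d[k] * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt, eps):
    """Advance positions and velocities in place by one kick-drift-kick step."""
    acc = accelerations(pos, mass, eps)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
            pos[i][k] += dt * vel[i][k]        # full drift
    acc = accelerations(pos, mass, eps)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick


def total_energy(pos, vel, mass, eps):
    """Kinetic plus softened potential energy, used to check the integration."""
    kin = sum(0.5 * m * sum(v * v for v in vi) for m, vi in zip(mass, vel))
    pot = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            r2 = sum((pos[j][k] - pos[i][k]) ** 2 for k in range(3)) + eps ** 2
            pot -= G * mass[i] * mass[j] / math.sqrt(r2)
    return kin + pot


# Toy example: two equal masses on a (nearly) circular orbit
mass = [1.0, 1.0]
v_circ = math.sqrt(G * mass[0] / 2.0)  # circular speed at separation 1, ignoring softening
pos = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
vel = [[0.0, -v_circ, 0.0], [0.0, v_circ, 0.0]]
eps = 0.01
e_start = total_energy(pos, vel, mass, eps)
for _ in range(1000):
    leapfrog_step(pos, vel, mass, dt=1e-3, eps=eps)
e_end = total_energy(pos, vel, mass, eps)
```

Leapfrog is a common choice for this kind of problem because it is time-reversible and symplectic. Its energy error stays bounded over many orbits, so `e_start` and `e_end` in the toy run should agree closely.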

Scientists at the Max Planck Institute for Astrophysics, together with collaborators in the international Virgo Consortium, have carried out a new simulation of this kind using an unprecedented number of particles: more than 10 billion. This is about an order of magnitude more than the largest computations carried out in the field so far. It also significantly exceeds the long-term growth trend of cosmological simulations, a fact that inspired the name "Millennium Simulation" for the project. This progress was made possible by important algorithmic improvements in the simulation code and by the high degree of parallelization achieved with it. Together these allowed the computation to run efficiently on a 512-processor partition of the IBM p690 supercomputer at the Computing Center of the Max Planck Society (RZG). Still, the computational challenge of the project proved formidable. Nearly all of the machine's 1 TB of aggregate physical memory had to be used, and analyzing the ~20 TB of data produced by the simulation required innovative processing methods as well.
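The scale of these numbers can be checked with a few lines of arithmetic. This sketch assumes a particle grid of 2160³, the figure usually quoted for the Millennium run and consistent with "more than 10 billion". It also treats "1 TB" as 10¹² bytes; both are assumptions rather than values stated in this article. With these inputs the average memory budget comes out at roughly 99 bytes per particle. That tight budget is consistent with the remark that nearly all of the available memory had to be used.

```python
# Back-of-the-envelope numbers for a 2160^3-particle run (sketch)
N_SIDE = 2160             # particles per dimension (assumed 2160^3 grid)
N_PART = N_SIDE ** 3      # total particle count, just over 10 billion
N_CPU = 512               # processors used (from the text)
MEMORY_BYTES = 1e12       # "1 TB" taken as 10^12 bytes (assumption)

# 512 = 8^3 and 2160 is divisible by 8, so this division is exact
particles_per_cpu = N_PART // N_CPU
# Average memory available per particle across the whole machine
bytes_per_particle = MEMORY_BYTES / N_PART

print(f"total particles:     {N_PART:,}")
print(f"particles per CPU:   {particles_per_cpu:,}")
print(f"bytes per particle:  {bytes_per_particle:.1f}")
```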

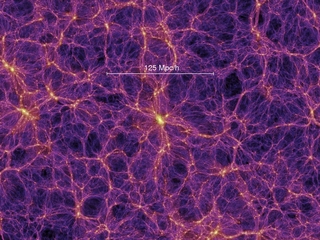

The simulation volume is a periodic box of 500 Mpc/h on a side, giving

the particles a mass of 8.6 x 108/h solar masses, enough to represent

dwarf galaxies by about a hundred particles, galaxies like the Milky

Way by about a thousand, and the richest clusters of galaxies with

several million. The top panel of Figure 1 gives a visual impression

of the dark matter structure on large scales at the present time. The

Millennium simulation shows a rich population of halos of all sizes,

which are linked with each other by dark matter filaments, forming a

structure which has become known as "Cosmic Web". The spatial

resolution of the simulation is 5 kpc/h, available everywhere in the

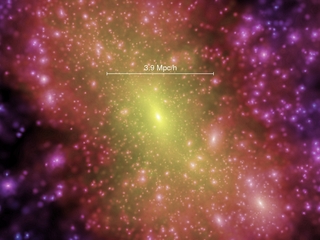

simulated volume. The resulting large dynamic range of 105 per

dimension in 3D allows extremely accurate statistical

characterizations of the dark matter structure of the universe,

including the resolution of dark matter substructures within

individual halos, as seen in the lower panel of Figure 1, where we

zoom in on one of the hundreds of rich galaxy clusters formed in the

simulation. In Figure 2, we show the non-linear halo mass function of

the simulation at different times, serving as an example for the

exquisite accuracy with which key cosmological quantities can be

measured from the simulation.
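The quoted particle mass follows from the mean matter density and the box volume:

m_p = Ω_m ρ_crit L³ / N

This sketch assumes a matter density parameter Ω_m = 0.25 (the value usually quoted for the Millennium cosmology, not stated in this article). It also assumes 2160³ particles and the standard present-day critical density of 2.775 × 10¹¹ h² solar masses per Mpc³. Under these assumptions it reproduces the 8.6 × 10⁸/h solar masses and the 10⁵ dynamic range given in the text. The halo masses in the final loop are round illustrative values, not figures from the article.

```python
RHO_CRIT = 2.775e11   # critical density today, h^2 M_sun / Mpc^3
OMEGA_M = 0.25        # matter density parameter (assumed cosmology)
BOX_MPC = 500.0       # box side in Mpc/h (from the text)
N_PART = 2160 ** 3    # assumed particle count
RES_KPC = 5.0         # spatial resolution in kpc/h (from the text)

# Total matter mass in the box, shared equally among the particles (M_sun/h)
particle_mass = OMEGA_M * RHO_CRIT * BOX_MPC ** 3 / N_PART

# Dynamic range per dimension: box side over resolution, both in kpc/h
dynamic_range = BOX_MPC * 1000.0 / RES_KPC


def particles_per_halo(halo_mass):
    """Approximate number of particles in a halo of mass halo_mass (M_sun/h)."""
    return halo_mass / particle_mass


print(f"particle mass: {particle_mass:.2e} M_sun/h")
print(f"dynamic range: {dynamic_range:.0e}")
for m in (1e11, 1e12, 1e15):  # illustrative round halo masses, M_sun/h
    print(f"halo of {m:.0e} M_sun/h -> ~{particles_per_halo(m):,.0f} particles")
```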
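At its core, a halo mass function like the one in Figure 2 is a histogram. Halos are counted in logarithmic mass bins, and each count is divided by the simulated volume and the bin width. This sketch assumes that a halo catalogue is already available as a list of masses in solar masses per h; identifying the halos is a separate step not shown here. It attaches simple Poisson error bars to each bin. The function name, the binning and the toy catalogue are hypothetical, and this is not the analysis pipeline behind the figure.

```python
import math


def mass_function(halo_masses, box_side, log_min, log_max, n_bins):
    """Binned differential halo mass function dn/dlog10(M).

    halo_masses: halo masses in M_sun/h; box_side: periodic box size in Mpc/h.
    Returns bin centres in log10(M), number densities in (Mpc/h)^-3 per dex,
    and Poisson (sqrt(N)) uncertainties.
    """
    volume = box_side ** 3
    width = (log_max - log_min) / n_bins
    counts = [0] * n_bins
    for m in halo_masses:
        x = math.log10(m)
        if log_min <= x < log_max:
            # min() guards against rounding pushing x just onto the upper edge
            counts[min(int((x - log_min) / width), n_bins - 1)] += 1
    centres = [log_min + (i + 0.5) * width for i in range(n_bins)]
    density = [c / (volume * width) for c in counts]
    errors = [math.sqrt(c) / (volume * width) for c in counts]
    return centres, density, errors


# Tiny made-up catalogue, for illustration only
toy_masses = [3e12] * 10 + [3e13] * 5
centres, dndlogm, err = mass_function(toy_masses, box_side=100.0,
                                      log_min=11.0, log_max=15.0, n_bins=4)
```

Applying the same binning to catalogues from several output times would give one curve per epoch.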

An important feature of the new simulation is that it gives an

essentially complete inventory of all luminous galaxies above about a

tenth of the characteristic galaxy luminosity. This aspect is

particularly important for constructing a new generation of

theoretical mock galaxy catalogues, which for the first time allow a

direct comparison with observational data sets on an "equal footing",

because the Millennium simulation has a good enough mass resolution to

yield a representative sample of galaxies despite covering a volume

comparable to the large observational redshift surveys that have

become available recently. The galaxy data obtained for the

Millennium simulation will be stored in a "theoretical virtual

observatory", allowing queries similar to those applied to large

observational databases. The large volume of the Millennium Simulation

is also crucial for studying rare objects of low space density, such

as rich clusters of galaxies, or the first luminous quasars at high

redshift. At the same time, the high dynamic range of the simulation

allows accurate theoretical predictions for effects of strong and weak

gravitational lensing, and it allows the study of fluctuations induced

in the cosmic microwave background by the time-varying gravitational

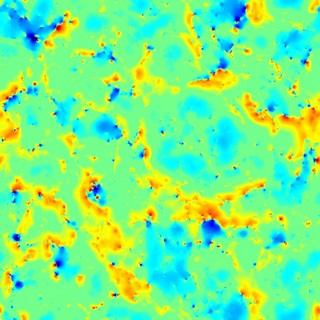

potential, as illustrated in the Figure 3. As cosmology enters a

"precision era", large computer simulations like the Millennium run

become ever more important to account for the full complexity of

cosmic structures, and to provide sensitive tests of our current

theoretical understanding.
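Large observational databases, such as the Sloan Digital Sky Survey archive, are queried with SQL. This sketch shows what a selection of bright galaxies in a cubic sub-volume of a simulated catalogue might look like. It uses Python's built-in `sqlite3` module and a tiny made-up table. The table name, columns, magnitude band and rows are all hypothetical and do not describe the actual schema or contents of the Millennium galaxy data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE galaxies (
        galaxy_id INTEGER PRIMARY KEY,
        x REAL, y REAL, z REAL,   -- comoving position, Mpc/h
        mag_r REAL,               -- absolute magnitude (hypothetical band)
        halo_mass REAL            -- host halo mass, M_sun/h
    )
""")
# Made-up rows for illustration only
rows = [
    (1, 10.0, 20.0, 30.0, -21.5, 2.0e12),
    (2, 12.0, 22.0, 31.0, -18.0, 1.5e11),
    (3, 250.0, 250.0, 250.0, -23.0, 8.0e14),
    (4, 11.0, 19.0, 29.5, -20.2, 6.0e11),
]
conn.executemany("INSERT INTO galaxies VALUES (?, ?, ?, ?, ?, ?)", rows)

# Galaxies brighter than a magnitude limit inside a cubic sub-volume,
# brightest (most negative magnitude) first
query = """
    SELECT galaxy_id, mag_r, halo_mass
    FROM galaxies
    WHERE x BETWEEN ? AND ? AND y BETWEEN ? AND ? AND z BETWEEN ? AND ?
      AND mag_r < ?
    ORDER BY mag_r
"""
result = conn.execute(query, (0, 50, 0, 50, 0, 50, -20.0)).fetchall()
conn.close()
```

Using `?` placeholders keeps the selection limits out of the SQL text, so the same query can be rerun with different sub-volumes or magnitude cuts.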

Volker Springel

Further information:

Virgo Consortium

Virgo web page at the MPI for Astrophysics

A. Jenkins, C.S. Frenk, S.D.M. White, et al., "The mass function of dark matter halos", Mon. Not. R. Astron. Soc., 321, 372 (2001)

V. Springel, N. Yoshida, S.D.M. White, "GADGET: a code for collisionless and gasdynamical cosmological simulations", New Astronomy, 6, 79 (2001)

V. Springel, S.D.M. White, G. Tormen, G. Kauffmann, "Populating a cluster of galaxies: Results at z=0", Mon. Not. R. Astron. Soc., 328, 726 (2001)
